If you are conducting systematic literature searches, you are very likely running searches in online databases. How well do these searches capture the relevant literature?

In our recent paper (Foo et al. 2021) we explained the systematic review process, from question formulation to screening the retrieved records, but we only briefly mentioned the need for checking the performance of database searches. There is very little practical guidance available on how to do this and there is also some confusion about the relevant terminology.

To fill this gap, I will explain what benchmarking is and how it can be done, using a Scopus database search as an example. There are more complicated ways of benchmarking, especially in computational projects, but I will leave them for another time, as simple benchmarking is usually sufficient (unless you get a very picky manuscript reviewer).

Why Scopus?

Scopus has broad, interdisciplinary coverage and a user-friendly interface with advanced search functionality. For these reasons, Scopus is our database of choice for performing the main searches. We also use Web of Science (Web of Knowledge), which is quite similar, and the techniques presented in this post apply there too, with minor modifications.

What is benchmarking?

Benchmarking checks whether your search is able to find the relevant papers indexed (i.e. available as records) in a given database. You cannot know all the relevant records in the database in advance unless you check ALL of its records against your inclusion criteria, which is obviously impossible. So the check is usually done against a pre-determined set of relevant publications. We call this set "benchmarks" or the "benchmarking set"; other names used for it are "validation set" or "test set".

How to get a benchmarking set of papers?

We usually collect relevant papers from various sources: relevant reviews on the topic, Google Scholar, ConnectedPapers, CiteHero, etc. We pick a set of at least 10 papers and collect their full references (bibliographic records), including their DOIs.
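DOIs gathered from different sources often come in different forms: a bare `10.xxxx/...` string, a `https://doi.org/` URL, or a `doi:` prefix. Below is a minimal sketch for tidying the benchmark list before searching, assuming you keep the DOIs as plain text strings. `normalize_doi` and `clean_benchmark_list` are hypothetical helpers written for illustration, not part of any Scopus tool, and the example DOIs are made-up placeholders.

```python
import re

# Prefixes commonly found in front of DOIs copied from web pages or reference managers
_DOI_PREFIX = re.compile(r"^(https?://(dx\.)?doi\.org/|doi:\s*)", re.IGNORECASE)


def normalize_doi(raw: str) -> str:
    """Return a bare, lower-case DOI (hypothetical helper for illustration)."""
    doi = raw.strip()
    doi = _DOI_PREFIX.sub("", doi)
    # DOIs are case-insensitive, so lower-casing makes duplicates easy to spot
    return doi.lower()


def clean_benchmark_list(raw_dois):
    """Normalize DOIs and drop duplicates, keeping the original order."""
    seen = set()
    cleaned = []
    for raw in raw_dois:
        doi = normalize_doi(raw)
        if doi and doi not in seen:
            seen.add(doi)
            cleaned.append(doi)
    return cleaned


if __name__ == "__main__":
    raw = [
        "https://doi.org/10.1000/ABC123",  # placeholder DOI as a URL
        "doi:10.1000/abc123",              # same paper, different form
        " 10.1000/xyz789 ",                # placeholder DOI with stray spaces
    ]
    print(clean_benchmark_list(raw))  # ['10.1000/abc123', '10.1000/xyz789']
```

Lower-casing is safe here because DOIs are case-insensitive, and it means the same paper copied from two sources is counted only once.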

How to quickly benchmark in Scopus?

I will use an example based on our recent protocol for a systematic map (Vendl et al. 2021 - accepted preprint link). The benchmark set we used consists of 10 papers.

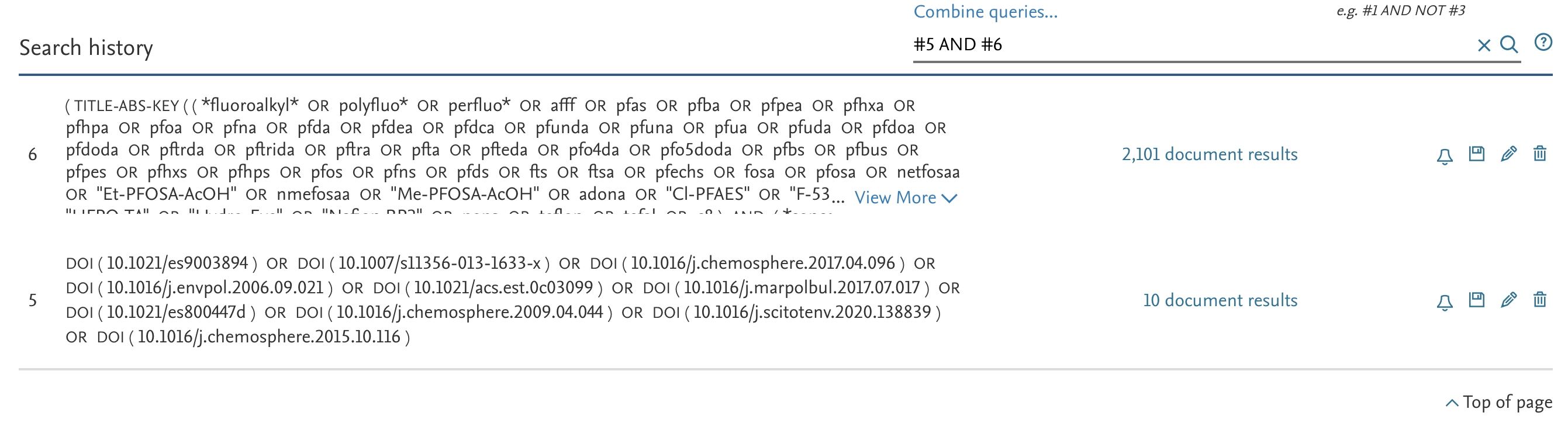

All steps are performed in Scopus using "Advanced document search", which accepts complex custom search strings and also keeps a history of recent searches (as a table at the bottom of the search page).

Here is the process in seven simple steps, with screenshots:

1.

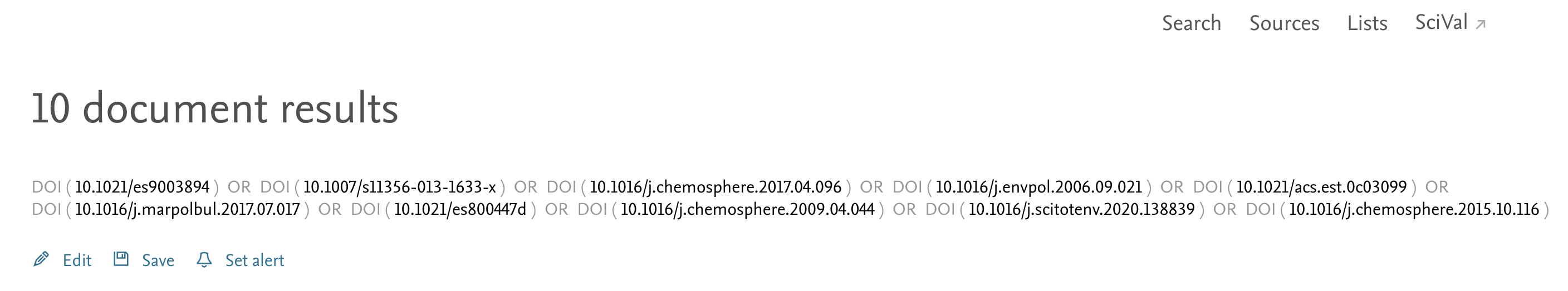

Find your benchmark papers on Scopus using a search string made of paper's doi (search strings based on titles are longer and less accurate):

This should find all benchmark papers that are indexed in the database (unless a paper's DOI is recorded incorrectly, which sometimes happens). If some papers are not picked up, search for them individually by title, author or citing papers, and note the "correct" DOI or other identification code that Scopus uses for them. In the example, all benchmark papers were found:

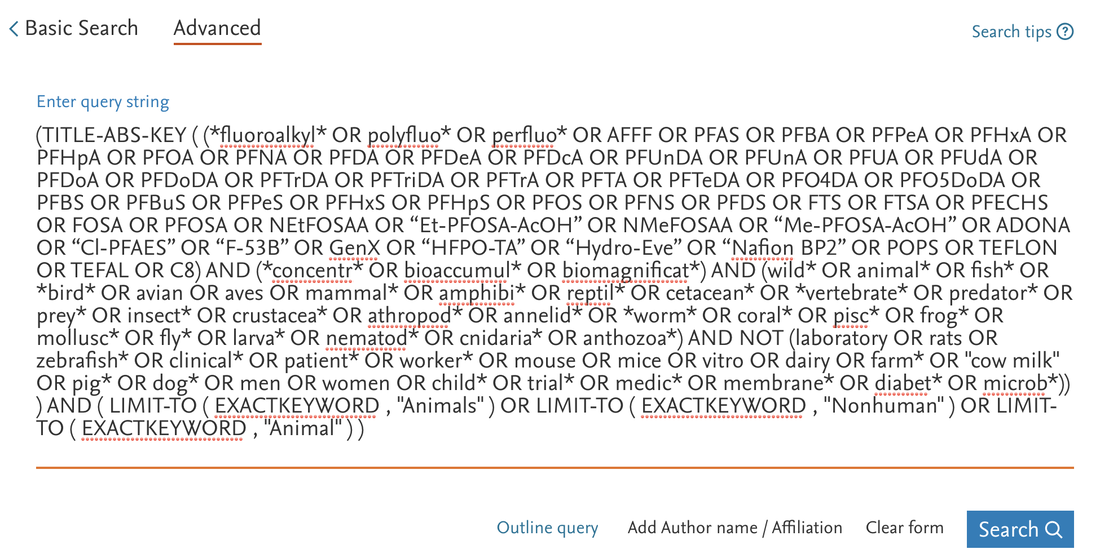
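The DOI search string used in the first step is simply the Scopus `DOI()` field code repeated for each paper and joined with `OR`. Below is a minimal sketch that assembles it from a list of DOIs so it can be pasted into "Advanced document search". `scopus_doi_query` is a hypothetical helper, the DOIs are made-up placeholders, and DOIs containing unusual characters (such as parentheses) are an assumption worth checking by hand in Scopus.

```python
def scopus_doi_query(dois):
    """Join DOIs into one Scopus advanced-search string: DOI(a) OR DOI(b) ..."""
    # Skip blanks and surrounding whitespace so stray empty lines do not break the query
    terms = [f"DOI({doi.strip()})" for doi in dois if doi.strip()]
    if not terms:
        raise ValueError("no DOIs supplied")
    return " OR ".join(terms)


if __name__ == "__main__":
    benchmark = ["10.1000/abc123", "10.1000/xyz789"]  # placeholder DOIs
    print(scopus_doi_query(benchmark))
    # DOI(10.1000/abc123) OR DOI(10.1000/xyz789)
```

Generating the string rather than typing it avoids copy-paste slips, which matter here because a single mistyped DOI looks exactly like a paper missing from the database.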

Now run the search you want to validate against the benchmark set, e.g.:

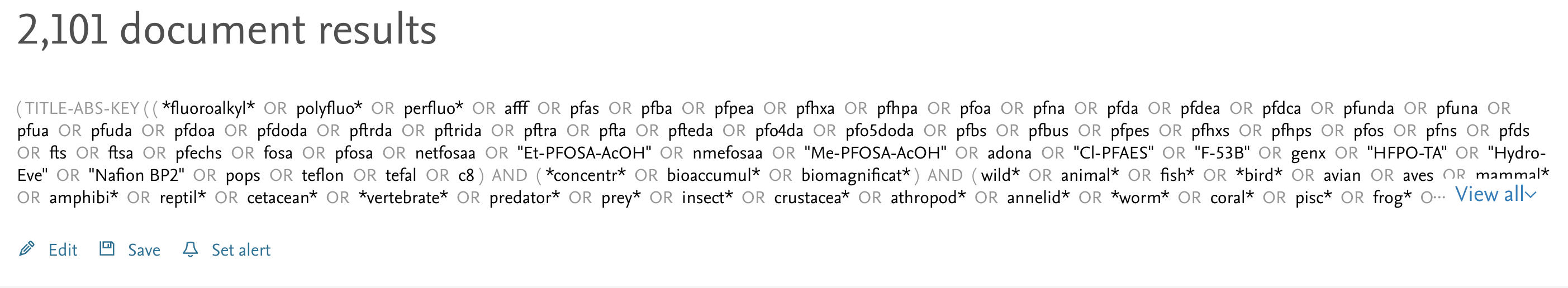

This search finds over 2,000 references:

When you go back to the main Advanced Search window (the easiest way is to click the "Search" link in the top right of the results window), you will see a table with your search history below the search box. At the top right of that table is a small box titled "Combine queries...", which lets you quickly combine searches (you could also do this manually in the search box, but that requires carefully copying and pasting long search strings). Simply combine the search numbers from the search history (in this case, searches #5 AND #6):

After you press Enter, the search is re-run with the combined search strings:

When the number of returned records equals the number of papers in the benchmarking set (as in this example), all benchmark papers were found by the search string being validated. If the number is lower, some benchmark papers were missed. You do not have to aim for 100% search string sensitivity (the proportion of benchmark papers retrieved), but the search should locate most of the benchmark papers; if it does not, carefully revise your string and benchmark it again.
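Scopus does this check for you, but the logic behind the last steps can be written out explicitly. Below is a minimal sketch, assuming you have the record count (or the exported DOIs) from the combined search. `combined_query` builds the manual alternative to the "Combine queries..." box by ANDing the validated string with the DOI string; `sensitivity` and `missed_papers` turn the result into a number and a to-check list. All function names, the search string and the DOIs are hypothetical placeholders, not the strings from our protocol.

```python
def combined_query(search_string, benchmark_dois):
    """AND the search being validated with the DOI string for the benchmark set."""
    doi_part = " OR ".join(f"DOI({d})" for d in benchmark_dois)
    return f"({search_string}) AND ({doi_part})"


def sensitivity(n_found, n_benchmark):
    """Proportion of benchmark papers retrieved by the search (0 to 1)."""
    if n_benchmark <= 0:
        raise ValueError("benchmark set is empty")
    if not 0 <= n_found <= n_benchmark:
        raise ValueError("n_found must be between 0 and the benchmark size")
    return n_found / n_benchmark


def missed_papers(benchmark_dois, found_dois):
    """Benchmark DOIs absent from the combined search results (case-insensitive)."""
    found = {d.lower() for d in found_dois}
    return [d for d in benchmark_dois if d.lower() not in found]


if __name__ == "__main__":
    benchmark = ["10.1000/abc123", "10.1000/xyz789"]  # placeholder DOIs
    print(combined_query("TITLE-ABS-KEY(placeholder AND terms)", benchmark))
    # (TITLE-ABS-KEY(placeholder AND terms)) AND (DOI(10.1000/abc123) OR DOI(10.1000/xyz789))

    # 10 benchmark papers, combined search returns 10 records
    print(sensitivity(10, 10))  # 1.0

    # Only one placeholder DOI came back, so the other one needs a closer look
    print(missed_papers(benchmark, ["10.1000/ABC123"]))  # ['10.1000/xyz789']
```

Listing the missed DOIs, not just the count, tells you which papers to inspect when revising the string (e.g. which of their title, abstract or keyword terms your search does not cover).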

Advantages of benchmarking directly in an online database:

- quick and accurate, because databases use their internal record IDs to check the overlap between lists of references, rather than trying to match titles

- easily works with searches that bring up very large numbers of references; there is no need to download large files with all the records (downloads are usually limited: 2,000 records for Scopus and 1,000 for WoS, per download file)

- displays your search history, which you can export or copy to document your benchmarking process (add your own comments to it).
